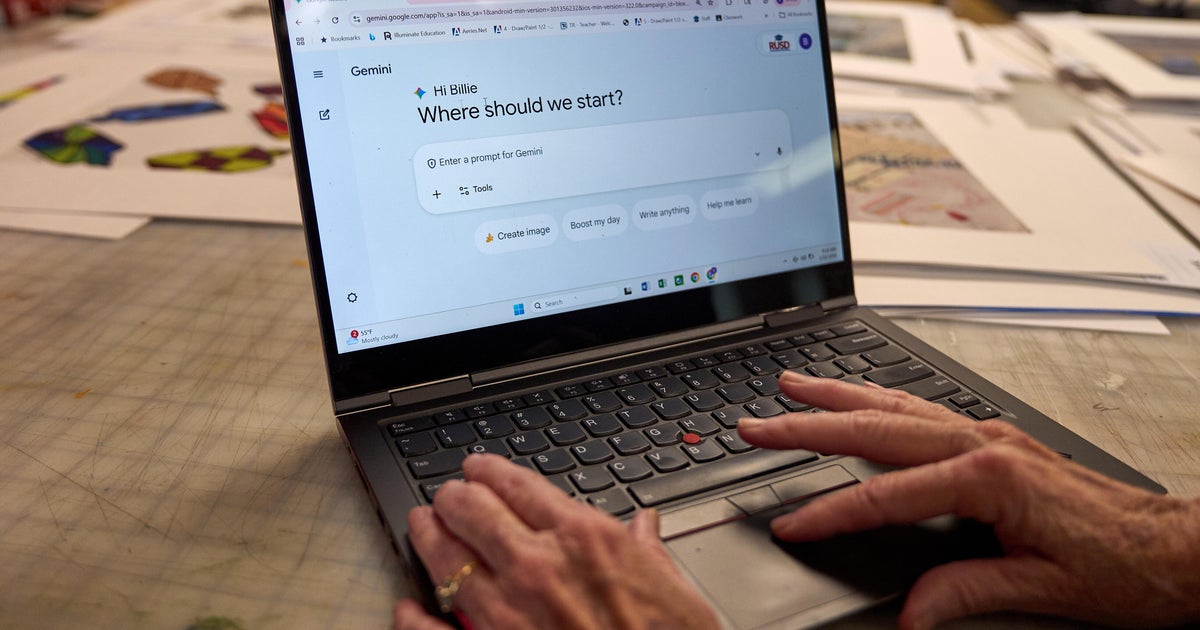

Psychiatrist urges caution when turning to AI for mental health help

BROOKLYN PARK, Minn. — In an emerging world of artificial intelligence, we've got access to mental health tools with the touch of our fingertips.

"It's exciting, it's novel, it really does have a lot of opportunity," said Dr. Brent Nelson, adult interventional psychiatrist and chief medical information officer at PrairieCare. "But there's the flip side, too."

Nelson puts it simply: with great power comes great responsibility. It's a mentality he keeps when interacting with artificial intelligence, specifically conversational AI like ChatGPT or the "My AI" feature on Snapchat.

"The cases where people are really leaning into it as being a source of truth or, you know, an artificial intelligence friend, it can be challenging. In the early days it was downright dangerous," he said.

Nelson says there are more safeguards in place to keep that from happening these days. Last summer, "Tessa," an AI-based app meant to help people struggling with eating disorders, was taken down after it gave some bad advice.

"What it is at the end of the day, it's just a big, complicated hunk of math and it's going to give you outputs that are just based on how it was trained or what it found on the internet before," he said. "You really have to be thoughtful about questioning information it's giving you because sometimes it will actually give you things that are false. They call that 'hallucinating.'"

Some providers say AI-powered chatbots can help fill gaps amid a shortage of therapists and growing demand from patients. Nelson says there is not yet enough research to gauge AI's impact.

"I think at some point we're going to have clearer sense on where it might help with disparities, and particularly finding enough providers fulfilling all of the need that is out in the community, but where just not quite there yet," he said.

He hears from patients all the time about using AI for mental health purposes. He says it can be a great resource for patients, but it should not be treated as a solution.

"As soon as you start to pivot into like, 'I'm going to use this to change my care, I'm going to use this to drive a health care decision, a mental health decision,' that's when you really want to have an expert, a professional involved," he said.

Nelson says this is especially true for teens and kids using the tool. The biggest question he gets from parents is "how much should I control?" To that, he says every family is different, but parents should be involved in striking that balance.

"Knowing what the kids are using, why they are using it, what are the implications, how does it make them feel, you know, it gives you a lot of information of whether to get an expert involved," he said.

Mental Health Resources

If you or someone you know is in crisis, get help from the Suicide and Crisis Lifeline by calling or texting 988.

In addition, help is available from the National Alliance on Mental Illness, or NAMI. Call the NAMI Helpline at 800-950-6264 or text "HelpLine" to 62640. There are more than 600 local NAMI organizations and affiliates across the country, many of which offer free support and education programs.