How new software can describe photos with almost human accuracy

If you've ever searched for an image online -- whether through a search engine or on a dedicated photo site -- you've likely been thwarted at one time or another by poorly captioned pictures that make finding what you want seem virtually impossible.

Researchers are now developing software capable of recognizing what's in a photograph and describing it in text, an ability that could make getting the right image much faster and easier.

Projects at Google and at Stanford University have combined the logic involved in translating text from one language to another with photo recognition engines to extract information from an image and automatically generate a summary that, in some cases, is just as clear and accurate as what a human would write.

First, a photo is run through a Convolutional Neural Network (CNN), which can identify individual objects. It is this kind of network that powers the facial recognition software which helps you tag your friends on Facebook. The characterization formed by the CNN is then fed into a language-generating Recurrent Neural Network (RNN), which is the brains behind machine translation from one language to another. The RNN spits out text reflecting the information outlined by the CNN -- a perfect caption.

Or a not-so-perfect one.
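
To make the two-stage idea concrete, here is a toy sketch in Python of how data might flow from a CNN-style encoder into an RNN-style decoder. It is not the Google or Stanford code. The vocabulary, the layer sizes, the function names (`conv_features`, `generate_caption`) and the fake 6x6 "photo" are all invented for illustration, and the weights are random rather than learned, so the output is gibberish. A real system would learn those weights from a large set of captioned photos.

```python
import math
import random

# Hypothetical tiny vocabulary; real systems use thousands of words.
VOCAB = ["<start>", "<end>", "a", "dog", "cat", "on", "grass", "sofa"]


def conv_features(image, kernels):
    """Toy 'CNN' encoder: slide each 2x2 kernel over the image, apply ReLU,
    then average each feature map down to a single number."""
    h, w = len(image), len(image[0])
    features = []
    for k in kernels:
        total, count = 0.0, 0
        for i in range(h - 1):
            for j in range(w - 1):
                act = sum(k[di][dj] * image[i + di][j + dj]
                          for di in range(2) for dj in range(2))
                total += max(0.0, act)  # ReLU keeps only positive responses
                count += 1
        features.append(total / count)  # global average pooling
    return features


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def rand_matrix(rng, rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


def generate_caption(features, params, max_len=6):
    """Toy RNN decoder: the hidden state is seeded from the image features,
    then each step emits the highest-scoring word (greedy decoding)."""
    h = [math.tanh(x) for x in matvec(params["W_img"], features)]
    word = VOCAB.index("<start>")
    caption = []
    for _ in range(max_len):
        # Recurrent update: new state depends on old state and previous word.
        rec = matvec(params["W_hh"], h)
        emb = params["W_emb"][word]
        h = [math.tanh(r + e) for r, e in zip(rec, emb)]
        scores = matvec(params["W_out"], h)
        word = max(range(len(VOCAB)), key=scores.__getitem__)
        if VOCAB[word] == "<end>":
            break
        caption.append(VOCAB[word])
    return caption


rng = random.Random(0)  # fixed seed so the run is repeatable
HIDDEN, N_KERNELS = 8, 4
kernels = [rand_matrix(rng, 2, 2) for _ in range(N_KERNELS)]
params = {
    "W_img": rand_matrix(rng, HIDDEN, N_KERNELS),
    "W_hh": rand_matrix(rng, HIDDEN, HIDDEN),
    "W_emb": rand_matrix(rng, len(VOCAB), HIDDEN),
    "W_out": rand_matrix(rng, len(VOCAB), HIDDEN),
}
# Stand-in for a photo: a 6x6 grid of random grayscale values.
image = [[rng.random() for _ in range(6)] for _ in range(6)]

features = conv_features(image, kernels)
caption = generate_caption(features, params)
print(" ".join(caption))
```

The point of the sketch is the hand-off: the encoder boils the picture down to a short list of numbers, and the decoder turns that list into words one at a time, with each word influencing the next.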

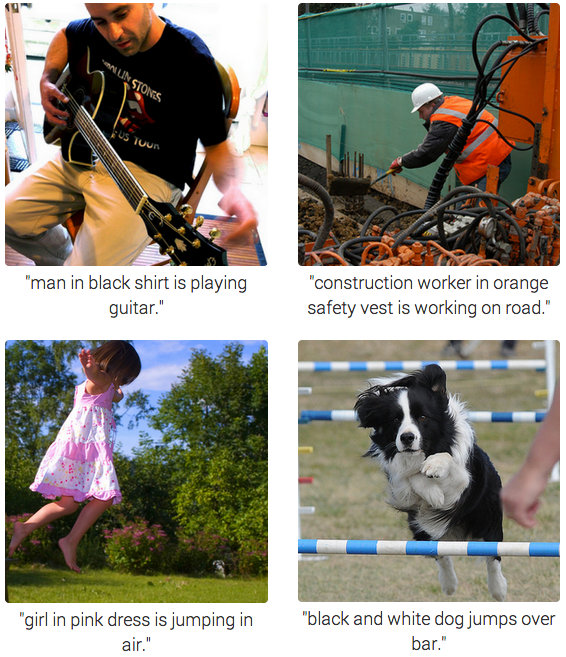

This is a learning system, which means that it improves as it processes more and more images. Sometimes, as these examples from Stanford show, it gets it right:

Sometimes, it's close:

And sometimes, as in this example from Google's Research Blog, the results can be way off:

There's a science and an art to describing something visual in words, and Google is trying to improve both. In their research paper, the Google engineers wrote, "Indeed, a description must capture not only the objects contained in an image, but it also must express how these objects relate to each other as well as their attributes and the activities they are involved in."

A computer that can meaningfully translate images into words could serve broader applications beyond improving the accuracy of search, including helping self-driving cars interpret traffic and obstacles, or creating robotic cameras that can precisely describe what they see in front of them.