Facebook knew Instagram was pushing girls to dangerous content: internal document

A previously unpublished internal document reveals Facebook, now known as Meta, knew Instagram was pushing girls to dangerous content.

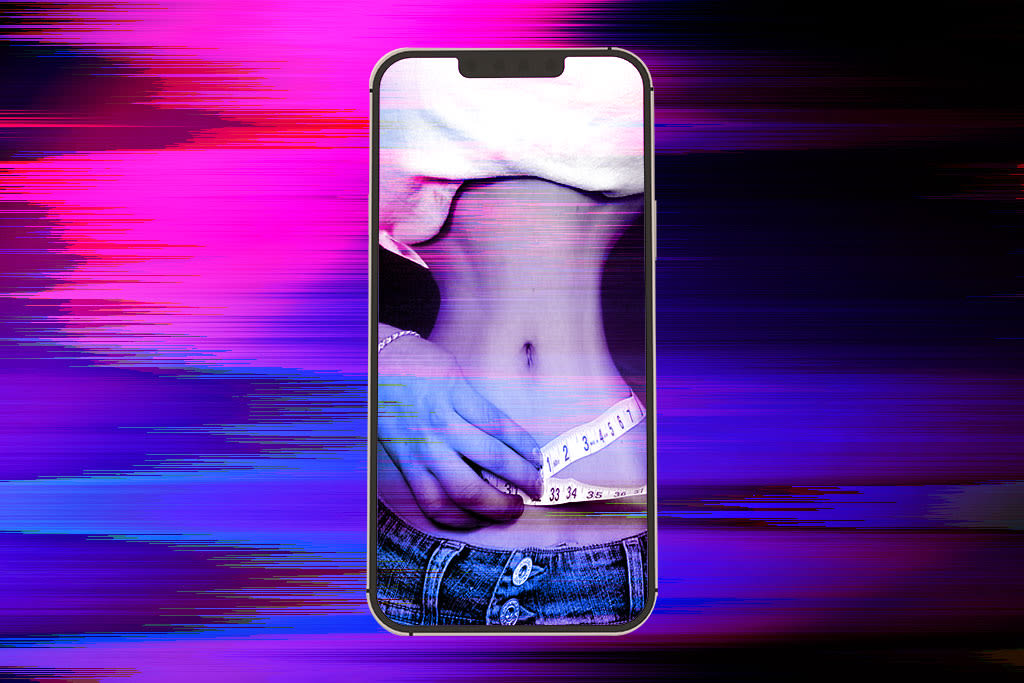

In 2021, according to the document, an Instagram employee ran an internal investigation on eating disorders by opening a false account as a 13-year-old girl looking for diet tips. She was led to graphic content and recommendations to follow accounts titled "skinny binge" and "apple core anorexic."

Other internal memos show Facebook employees raising concerns about company research that revealed Instagram made one in three teen girls feel worse about their bodies, and that teens who used the app experienced higher rates of anxiety and depression.

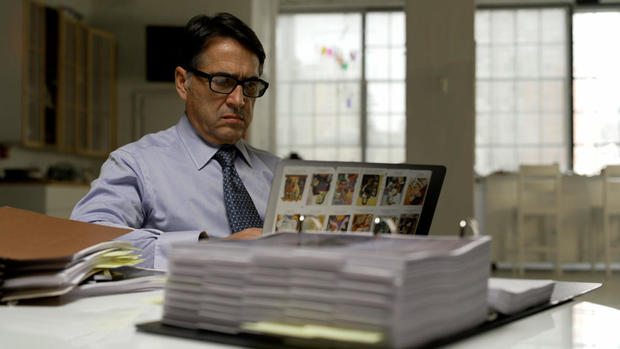

Attorney Matt Bergman started the Social Media Victims Law Center after reading the so-called "Facebook Papers," disclosed by whistleblower Frances Haugen last year. He's now working with more than 1,200 families who are pursuing lawsuits against social media companies. Next year, Bergman and his team will start the discovery process for the consolidated federal cases against Meta and other companies in multimillion-dollar lawsuits that he says are more about changing policy than financial compensation.

"Time after time, when they have an opportunity to choose between safety of our kids and profits, they always choose profits," Bergman told 60 Minutes correspondent Sharyn Alfonsi.

Bergman spent 25 years as a product liability attorney specializing in asbestos and mesothelioma cases. He argues the design of social media platforms is ultimately hurting kids.

"They have intentionally designed a product-- that is addictive," Bergman said. "They understand that if children stay online, they make more money. It doesn't matter how harmful the material is."

"So, the fact that these kids ended up seeing the things that they saw, that were so disturbing," Alfonsi asked, "wasn't by accident; it was by design?"

"Absolutely," Bergman said. "This is not a coincidence."

Bergman argues the apps were explicitly designed to evade parental authority and is calling for better age and identity verification protocols.

"That technology exists," Bergman said. "If people are trying to hook up on Tinder, there's technology to make sure the people are who they say they are."

Bergman also wants to do away with algorithms that drive content to users.

"There's no reason why Alexis Spence, who was interested in exercise, should have been directed to anorexic content," Bergman said. "Number three would be warnings so that parents know what's going on. Let's be realistic, you're never going to have social media platforms be 100% safe. But these changes would make them safer."

Meta, the parent company of Facebook and Instagram, declined 60 Minutes' request for an interview, but its global head of safety Antigone Davis said, "we want teens to be safe online" and that Instagram doesn't "allow content promoting self-harm or eating disorders." Davis also said Meta has improved Instagram's "age verification technology."

But when 60 Minutes ran a test two months ago, a producer was able to lie about her age and sign up for Instagram as a 13-year-old with no verification. 60 Minutes was also able to search for skinny and harmful content. And while a prompt came up asking if the user wanted help, we instead clicked "see posts" and easily found content promoting anorexia and self-harm.