What's next in artificial intelligence according to a tech visionary who may hold the cards to our future

Artificial general intelligence, in which computers have human-level cognitive abilities, is just five to 10 years away, Google DeepMind CEO Demis Hassabis predicted in an interview with 60 Minutes.

AI is on track to understand the world in nuanced ways, to be embedded in everyday lives and to not just solve important problems, but also develop a sense of imagination as companies race to advance the technology, Hassabis said.

"It's moving incredibly fast," Hassabis said. "I think we are on some kind of exponential curve of improvement. Of course, the success of the field in the last few years has attracted even more attention, more resources, more talent. So that's adding to the, to this exponential progress."

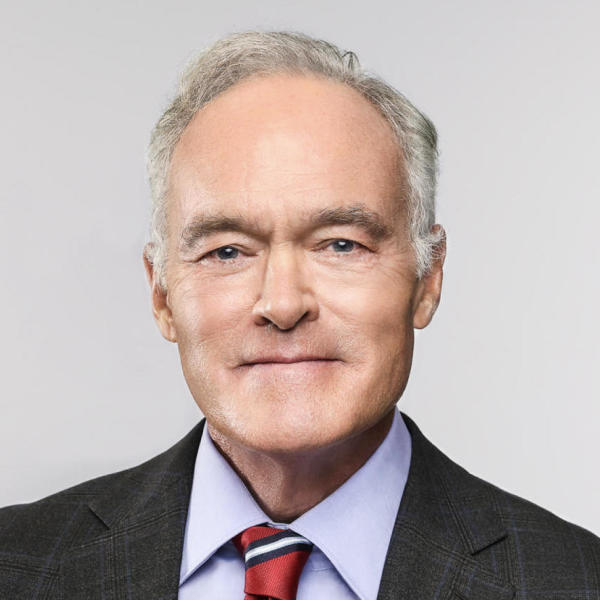

Demis Hassabis and AI

Hassabis, who co-founded DeepMind, is one of the early pioneers of AI. Google acquired DeepMind in 2014.

"What's always guided me and, and the passion I've always had is understanding the world around us," Hassabis said. "That's what's driven me in my career. I've always been, since I was a kid, fascinated by the biggest questions. You know, the meaning of life, the nature of consciousness, the nature of reality itself."

To find answers to those questions, Hassabis studied at Cambridge, MIT and Harvard. He's a computer scientist with a doctorate in neuroscience.

"I've loved reading about all the great scientists who worked on these problems and the philosophers, and I wanted to see if we could advance human knowledge," Hassabis said. "And for me, my expression of doing that was to build what I think is the ultimate tool for advancing human knowledge, which is AI."

His work on AI led to a Nobel Prize. The 48-year-old British scientist had worked with colleague John Jumper to create an AI model that predicted the structure of proteins. Mapping one protein could take years. DeepMind's AI model predicted the structure of 200 million proteins in one year.

What's next for AI

AI has progressed incredibly quickly in recent years, Hassabis said. It's challenging to keep up with the advances, even for people in the field.

Part of that progress includes DeepMind's Project Astra, an AI companion of sorts that can see, hear and chat about anything known to humanity. Astra is part of a new generation of chatbots, able to interpret the world with its own "eyes." Correspondent Scott Pelley tested Astra by showing the AI app artworks selected by 60 Minutes. Astra identified the paintings and answered questions about them. The AI even created a story about "Automat" by Edward Hopper.

"It's a chilly evening in the city. A Tuesday, perhaps. The woman, perhaps named Eleanor, sits alone in the diner," Astra said. "She is feeling melancholy due to the uncertainty of her future and the weight of unfulfilled dreams."

Hassabis said he's often surprised by what AI systems can do and say because the programs are sent out onto the internet for months to learn for themselves and can then return with unexpected skills.

"We have theories about what kinds of capabilities these systems will have. That's obviously what we try to build into the architectures," he said. "But at the end of the day, how it learns, what it picks up from the data, is part of the training of these systems. We don't program that in. It learns like a human being would learn. So new capabilities or properties can emerge from that training situation."

Now Google DeepMind is training its AI model "Gemini" to take actions in the world, such as booking tickets or ordering online.

Robotics will also be part of advancing AI, leading to machines that can understand the world around them, reason through instructions and follow them to completion.

"I think it will have a breakthrough moment in the next couple of years where we'll have demonstrations of maybe humanoid robots or other types of robots that can start really doing useful things," Hassabis said.

Is AI self-aware and able to imagine?

None of the AI systems out there today feel self-aware or conscious in any way, Hassabis said. Self-awareness is a possibility down the line, but it's not an explicit goal for Hassabis.

"My advice would be to build intelligent tools first and then use them to help us advance neuroscience before we cross the threshold of thinking about things like self-awareness," he said.

Hassabis also says AI is lacking in imagination.

"I think that's getting at the idea of what's still missing from these systems," Hassabis said. "They're still the kind of, you can still think of them as the average of all the human knowledge that's out there. That's what they've learned on. They still can't really yet go beyond asking a new novel question or a new novel conjecture or coming up with a new hypothesis that has not been thought of before."

Benefits, risks of AI

While the technology rapidly develops, Hassabis sees the potential for enormous benefits. With the help of AI, Hassabis said he believes the end of disease could be within reach in the next decade. Hassabis has AI blazing through solutions to drug development.

"So on average, it takes, you know, 10 years and billions of dollars to design just one drug," Hassabis said. "We can maybe reduce that down from years to maybe months or maybe even weeks."

He also believes AI could lead to "radical abundance," the elimination of scarcity.

Hassabis also feels AI needs guardrails: safety limits built into the system. One of his main fears is that bad actors may use AI for harmful ends, something that's already happening with some AI systems. Another concern is ensuring control of AI as the systems become more autonomous and more powerful. As technology companies compete for AI dominance, there's also a concern that safety may not be a priority.

Making sure safety limits are built into AI requires leading players and countries to coordinate, he said. AI systems can also, Hassabis believes, be taught morality.

"They learn by demonstration. They learn by teaching," Hassabis said. "And I think that's one of the things we have to do with these systems, is to give them a value system and a guidance, and some guardrails around that, much in the way that you would teach a child."