Minnesota House panel revives discussion about bill prohibiting social media algorithms targeting teens

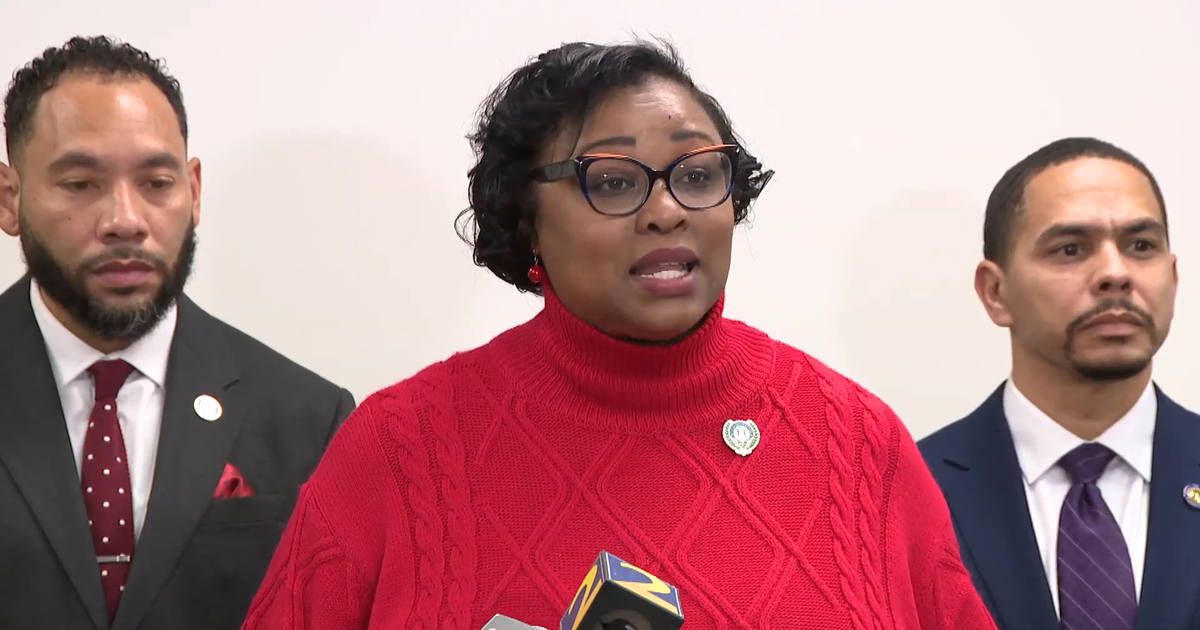

ST. PAUL, Minn. -- A Minnesota House panel on Wednesday advanced a proposal designed to curb the harmful impacts social media can have on children by restricting the use of algorithms for users under the age of 18.

It's a revived effort at the Capitol this year at a time when technology companies and platforms like TikTok are under intense scrutiny. The legislation, which has bipartisan support, forbids a social media platform in Minnesota with a million or more users from targeting kids and teens with algorithms, which curate content that the platform determines a user may like or interact with. There'd be a penalty of $1,000 per violation should the bill pass.

For children to even open an account on a social media website or app, parental consent would be required.

Rep. Kristin Robbins, R-Maple Grove, initially introduced the bill last year after reading a Wall Street Journal report about how TikTok was flooding teens' feeds with eating disorder videos. The video-sharing app said it adjusted its algorithm after the story was published.

Robbins told a panel of lawmakers on the House Commerce Committee on Wednesday that the legislation includes feedback she heard last year and is "narrowly targeted at the one issue of these social media companies shoveling things our kids have not liked or followed—unsolicited content—to them."

Supporters of the measure said the bill would be a good step forward in limiting vulnerable children's exposure to content that is harmful to their mental or physical health. Republicans and Democrats have signed on as co-authors.

"Our youngest kids are exposed to media messages at rates that most of us in this room can't comprehend," said Lisa Radzak, executive director of WithAll, a nonprofit that focuses on eating disorder treatment and prevention. "We had to wait about a month for the magazine to come in the mail that maybe had some of the harmful messages in it. Or kids today are being saturated with this, like as though they're being under a firehose."

A recent study from the American Psychological Association found that young adults who reduced their social media screen time by 50% for just a few weeks saw significant improvements in how they viewed their weight and overall appearance.

Some trade groups representing technology companies raised concerns about implementing age verification requirements and argued the bill is too broad.

Jeff Tollefson, president of the Minnesota Technology Association, warned that the language could extend beyond social media companies and impact major retailers leveraging the internet for their products.

"We're not in any way against protecting children from targeted content related to issues such as eating disorders, self-harm, smoking, drinking, etc," Tollefson said. "But we do not believe that this overly broad and vague bill, coupled with the private right of action, is the answer. We support the bill's intent to keep children safe online, but House File 1503 in its current form creates more questions than answers."

The legislation doesn't cover algorithms for internet search providers, e-mail or video streaming services like Netflix.

The hearing comes as social media algorithms, and the legal liability of tech companies for content published on their platforms, are at the center of a case before the U.S. Supreme Court.

William McGeveran, a law professor at the University of Minnesota who specializes in internet privacy and First Amendment law, told WCCO last year that states across the U.S. and Congress are introducing dozens of policies to regulate or break up big tech companies.

One proposal in the U.S. Senate called the "Kids Online Safety Act" stated platforms have a "duty to prevent and mitigate the heightened risks of physical, emotional, developmental, or material harms to minors" from certain content. There was also language for an "opt-out" for algorithms that use a child's data.

Some rules, McGeveran said, could pass the First Amendment test, depending on how they are written. He noted the Minnesota House proposal is the only one so far targeting algorithms.

"It's much less likely to be a First Amendment problem if it regulates how you do it instead of what you say," McGeveran said. "So a rule that says algorithms can be used or can't has a better chance than a rule that says this is the speech that's harmful, get rid of it."