How websites use "dark patterns" to manipulate you

There's no denying it: the internet is annoying. From endless website pop-ups and unavoidable cookies that track your every online move to chirpy "notifications" that try to shame you into signing up for useless mailing lists, using the web today means being pushed, pulled and generally manipulated into paying attention to something.

So why do companies pester us so relentlessly, given the risk of alienating the very consumers they're trying to woo? Simply put, it works really, really well. As a first-of-its-kind study shows, such tactics are highly effective at getting people to sign up for services they don't actually want. What's more, despite the conventional wisdom that websites can only annoy users so much before they scurry in the other direction, many of these tactics don't even make users noticeably angry, the study finds.

In the paper, published in the Journal of Legal Analysis, the University of Chicago's Jamie Luguri and Lior Strahilevitz conducted several experiments involving thousands of participants broadly resembling the U.S. population. Strahilevitz, a law professor, and Luguri, a law clerk and University of Chicago graduate, tricked participants into thinking they had been enrolled in a "privacy protection plan" via a bait-and-switch scheme and then required them to click through several increasingly manipulative interfaces.

In one experiment, the control group was allowed to click "accept" or "decline," while a second group was given a screen with the options "Accept and continue (recommended)" or "Other options." Clicking on "Other options" led to the following choices: "I don't want to protect my data or credit history," or "After reviewing my options, I would like to protect my privacy and receive data protection and credit history monitoring."

"This is a classic obstruction dark pattern: we're making it a little bit more painful, we're sucking up a little bit of their free time if they want to say no," Strahilevitz explained in a recent presentation to the Federal Trade Commission. The second screen, in which users have to click on a statement they don't agree with in order to decline a service, uses a technique called "confirmshaming," which is widely used by shopping websites and popular magazine sites.

The two screens more than doubled the number of people who signed up for the researchers' fictional data protection plan. Just 11% of the control group agreed to the plan, while fully a quarter of the experimental group, who were presented with the two screens, signed up.

Notably, people who were effectively nudged into signing up for the service didn't appear any angrier than those who received the straightforward options. A third group, which was put through even more aggressive pop-ups, signed up at an even higher rate — 37% to 42%. The participants in that group did express more anger, but only those who ultimately declined the plan. Those who enrolled were not noticeably annoyed despite having been forced to click through multiple manipulative screens.

The upshot: Manipulative design is highly effective at making people buy products they don't actually want. Far from being upset at being pushed and prodded this way, many of those buyers may not even realize they made a purchase.

"We're seeing dark patterns proliferate because they're extremely effective," Strahilevitz said. "Employing dark patterns seems to be all upside for firms ... they can employ just a couple of dark patterns and get away with it without alienating their consumers."

Strahilevitz noted that he doesn't think his paper is the first to test manipulative design in this way, only the first to make its findings public. "I suspect that a lot of social scientists working in-house for e-commerce companies have been running studies exactly like the ones that Jamie and I ran for years. We're just the first to publish the results and share this data with the world," Strahilevitz said.

Dark patterns everywhere

In the last decade, manipulative website design has gone from being used by a handful of companies to near-ubiquity online and in consumer apps.

"Imagine if you ran a business and you could press a button to get your customers to spend 21% more. It's a no-brainer," Harry Brignull, who coined the term "dark patterns" in 2010 and has since catalogued many of these marketing tricks online, said at the FTC workshop.

A decade ago, Brignull said, he thought that publicizing these techniques would be enough to shame companies into abandoning them. But that hasn't happened, he said — because for many companies, the rewards of these designs are too good to give up.

"Consumers haven't kicked back, companies haven't self-regulated, and they haven't made it easy enough to speak up, either," he said.

Other research has shown that consumers are more likely to let down their guard online than when shopping in a store or interacting with a salesperson, according to Katharina Kopp, deputy director of the Center for Digital Democracy, which has pushed for tighter regulation of e-commerce.

"Consumers are a little bit more suspicious of merchants offline, of traditional stores," she said. "They are more trusting online."

Although many consumers are generally familiar with the kind of sales tactics used in traditional advertising, such as TV commercials or billboards, digital spaces have changed the rules of the game, Kopp said.

"The entire interface between companies (businesses that are trying to sell products and services) and the consumer is used by companies to optimize the interaction in their interest. Traditionally we've thought about advertising as a way to do that. But they clearly use the entire design interface as well," she said.

The FTC is now asking the public for input on how to regulate sites designed to manipulate users.

Cost "had no effect"

Elsewhere in the study, the researchers drilled down into which types of "dark patterns" were most effective and which didn't seem to matter. In one version, participants were told the "data protection plan" cost $38.99, far more than the most expensive credit-monitoring options commercially available.

Neoclassical economics predicts that the higher price should have made consumers more attentive and "less prone to mistakes or impulsive decision-making," the paper said. But no such thing happened — consumers were just as likely to accept the higher-priced plan when they were exposed to manipulative design.

"Tripling the cost of a service had no effect on uptake in this domain," the authors concluded. Instead, whether or not a user accepted was dictated by the interface they experienced.

Another tactic that proved effective in getting users to accept the "data protection plan" was hidden information: the pop-up did not reveal the price of the service, saying only "Terms and conditions apply," and the price was shown only if the user clicked on an asterisk. Some 30% of people accepted the plan under these conditions, most of them likely without realizing they had signed up for something.

A further manipulative tactic, in which users were told that many others had signed up for the plan, was also effective. People exposed to this pattern were 50% more likely to accept the service than those in the control group. The tactic is called "social proof," since it attempts to manipulate consumers via the social pressure of thousands of other, unspecified consumers. And as other researchers demonstrated in 2019, these other consumers are sometimes made up.

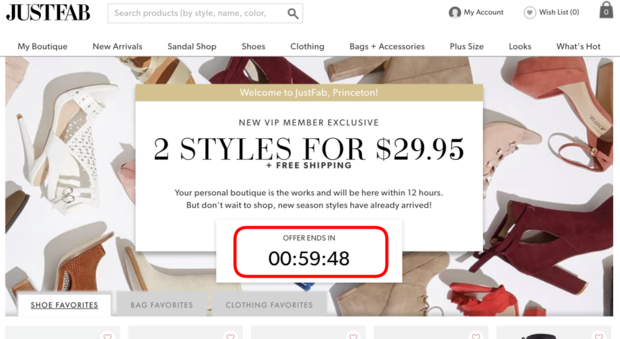

At least one type of dark pattern — a timer indicating that the offer was only available for 60 seconds — was not effective, according to the study.