Georgia Rep. Mike Collins' campaign uses AI-generated deepfake of Senator Jon Ossoff in tight Senate showdown

Nasty name-calling, ill-mannered insults, and menacing mudslinging have been a part of American politics for centuries. In this new age of no-holds-barred tactics, the barbs are becoming more brutal, and the ads, well, anything goes when it comes to edging out the competition, even if the attacks are artificial.

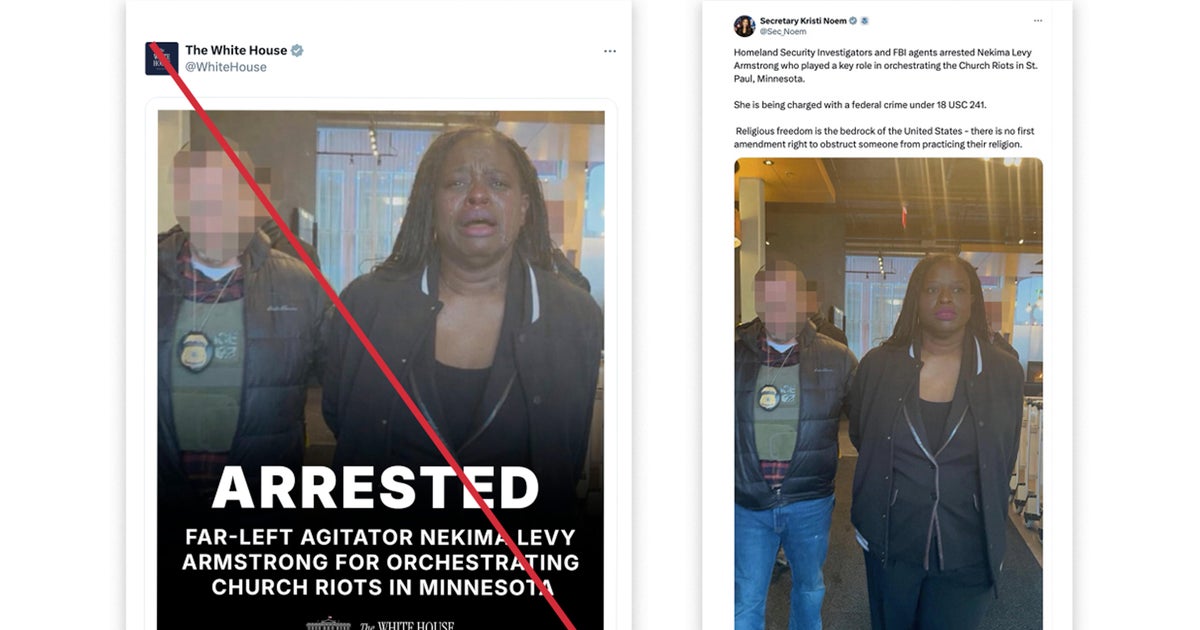

A new political ad in Georgia's U.S. Senate race is raising concerns about the use of artificial intelligence in elections after Rep. Mike Collins' campaign released a deepfake video showing Sen. Jon Ossoff mocking farmers and defending a government shutdown.

Ossoff never said any of it.

The video, posted last week on social media, was created using artificial intelligence and features computer-generated audio of Ossoff claiming to support the shutdown and saying he'd "only seen a farm on Instagram." The ad includes a small on-screen disclaimer that says "the video is AI-generated," so that it doesn't violate Georgia or federal laws.

The ad has sparked a wider debate over the responsible use of emerging technologies in political persuasion, and what happens when the line between truth and fabrication starts to blur.

Campaigns clash over the ad

The Georgia Republican Party defended the video as legitimate political satire.

Josh McKoon, chair of the Georgia GOP, said the party "stands behind the creative use of cutting-edge technology" and accused Democrats of being "terrified of losing power."

"This ad was clearly labeled as AI-generated satire," McKoon told CBS News Atlanta in a statement. "Any claim that it's a deepfake meant to deceive is just the latest desperate talking point."

Ossoff's campaign disagreed, calling the ad an intentional attempt to mislead voters. The incumbent candidate has pledged not to use the technology during this election cycle.

In a statement, an Ossoff campaign spokesperson said, "The only reason a candidate would need to use a deepfake to make up an opponent's words is if they didn't think they could win on their own. Georgians don't take well to people who lie to them."

The Collins campaign said that it plans to continue using AI tools.

"As technology evolves and creates new opportunities to reach and communicate with voters, the Collins campaign will be at the forefront embracing new tactics and strategies that pierce through lopsided legacy media coverage and deliver our message directly to voters," the campaign said in a statement.

Local Democrats blasted the move. Devon Cruz, a senior communications advisor with the Democratic Party of Georgia, said, "Instead of trying to deceive voters with deepfake videos, low-integrity Congressman Mike Collins should explain why he supports doubling Georgians' health insurance premiums."

Experts warn the public may struggle to tell what's real

Dr. Patrick Dicks, an AI and automation researcher, told CBS News Atlanta that deepfakes are no longer a futuristic threat; they're here now, and campaigns are already deploying them.

"What we're seeing now, I told everybody was coming back in 2017," Dicks said. "You can create audio of an opponent that sounds exactly like them. People hear something once and assume it's true."

Dicks said the danger is simple: deepfakes can mislead voters into believing a candidate said or did something that never happened.

"You can sway voters on something that is not factual," he said. "People may vote for or against someone based on a completely fabricated video."

And because most AI-generated media leaves no traceable origin, he said, anyone can create and share a deepfake, and campaigns may not even know where it started.

Deepfake regulations remain limited nationwide

Georgia lawmakers have moved to ban deceptive AI campaign materials. A bill commonly referred to as SB9 advanced in 2025 and would make it a crime to knowingly publish certain AI-generated campaign materials without required disclosures within 90 days of an election. The measure carries penalties that can escalate depending on intent and repeat offenses.

But Georgia is still in the early wave of states attempting to regulate the technology. In 2019, several states began passing legislation to address deceptive political deepfakes specifically tied to elections.

According to the National Conference of State Legislatures, in 2025, at least half of U.S. states enacted laws dealing with deepfakes, especially in elections, nonconsensual sexual imagery, and impersonation. All 50 states, D.C., Puerto Rico, and the Virgin Islands considered AI-related bills last year. And 38 states enacted around 100 AI measures, with more expected in 2026.

Dicks said the rapid rise of generative AI means states will need much stricter rules, including permanent watermarks and clear disclosures, to prevent widespread confusion.

"If a campaign uses AI, they should tell voters up front," he said. "Right now, people don't know what's real. And when they're confused, they may not vote at all."

A sign of what's coming

Collins' campaign ad is illustrative: without clear, enforceable rules, more campaigns and outside actors could use AI-generated media to influence voters.

"Eventually, every candidate will be a victim of this," Dicks said. "Without strong regulations, no one is safe from being impersonated."

A 2023 poll from the AP-NORC Center for Public Affairs Research and the University of Chicago Harris School of Public Policy found that nearly six in 10 adults (58%) believed AI tools, which can micro-target voters, mass-produce persuasive content, and generate realistic fake images and videos in seconds, would increase the spread of false and misleading information during the 2024 presidential election. By comparison, 6% said AI would reduce misinformation, while about one-third said it would make little difference.

AI-generated political content was widely available online ahead of the 2024 election. President Donald Trump shared AI-manipulated videos with his followers, including a distorted clip of CNN host Anderson Cooper on Truth Social. The Republican National Committee released a digitally warped ad depicting former President Joe Biden with the words, "What if the weakest president we've ever had was re-elected?" shortly after Biden announced his reelection bid.

Other examples include doctored videos of Biden appearing to attack transgender people, AI-generated images falsely showing children learning satanism in libraries, and manipulated images of Trump's mug shot before it was officially released. Some creators acknowledged the false origins of their AI content, but the viral reach underscores the challenge facing voters.

Georgia's 2026 Senate race is showing just how quickly artificial intelligence could change the rules of campaigning. Voters may soon be forced to navigate a digital minefield of manipulated images and voices, deciding not only who to support but what to believe.