The risks and promise of artificial intelligence, according to the "Godfather of AI" Geoffrey Hinton

The man who helped set today's AI advancements in motion wants governments, companies and developers to carefully consider the best ways to safely advance the technology.

Geoffrey Hinton, who has been called the "Godfather of Artificial Intelligence," retired from Google earlier this year.

Hinton believes AI has the potential for both good and harm. He said now is the moment to run experiments to understand AI and to pass laws ensuring the technology is used ethically.

"It may be we look back and see this as a kind of turning point when humanity had to make the decision about whether to develop these things further and what to do to protect themselves if they did," Hinton said. "I don't know. I think my main message is there's enormous uncertainty about what's gonna happen next. These things do understand. And because they understand, we need to think hard about what's going to happen next. And we just don't know."

Why Hinton is worried about AI

AI has the potential to one day take over from humanity, Hinton warned.

"I'm not saying it will happen," he said. "If we could stop them ever wanting to, that would be great, but it's not clear we can stop them ever wanting to."

Right now, Hinton believes that AI is intelligent and that these systems can understand and reason, though not as well as humans. Within five years, Hinton thinks there's a good chance that AI models like ChatGPT could reason better than people.

Sam Altman, the CEO of OpenAI, the company that developed ChatGPT, warned at a May Senate hearing that artificial intelligence technology could "go quite wrong." He said he wants to work with the government to prevent that from happening.

Hinton believes AI will increase productivity and efficiency, but he worries that many people could lose their jobs to artificial intelligence and that there may not be enough new jobs to replace the ones lost.

Hinton also worries about AI-powered fake news, biased AI systems that could harm job seekers, law enforcement's use of the technology and autonomous battlefield robots.

"You should definitely have quite a lot of awe and you should have a little tiny bit of dread, because it's best to be careful with things like this," Hinton said.

Hinton said he believes AI systems will eventually have self-awareness and consciousness.

"I think we're moving into a period when, for the first time ever, we may have things more intelligent than us," he said.

AI may already be better at learning than the human mind, Hinton said. Currently, the biggest chatbots have about a trillion connections, but the human brain has about 100 trillion.

"And yet, in the trillion connections in a chatbot, it knows far more than you do in your hundred trillion connections, which suggests it's got a much better way of getting knowledge into those connections," Hinton said.

AI systems are already writing computer code.

"One of the ways in which these systems might escape control is by writing their own computer code to modify themselves," Hinton said. "And that's something we need to seriously worry about."

AI's path forward

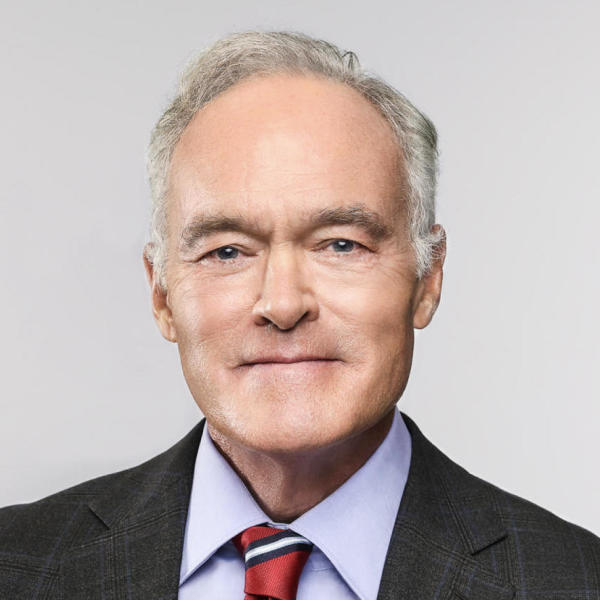

Given his fears about AI's potential risks, it would be easy to assume that Hinton regrets setting the technology in motion, but he told 60 Minutes correspondent Scott Pelley he has no regrets, in part because of AI's enormous potential for good.

"So an obvious area where there's huge benefits is health care," he said. "AI is already comparable with radiologists at understanding what's going on in medical images. It's going to be very good at designing drugs. It already is designing drugs. So that's an area where it's almost entirely going to do good. I like that area."

In an April interview with 60 Minutes, Google CEO Sundar Pichai said the company was releasing its AI advancements in a responsible way. He said society needs to adapt quickly and come up with regulations for AI in the economy, along with laws to punish abuse.

"This is why I think the development of this needs to include not just engineers, but social scientists, ethicists, philosophers and so on," Pichai told 60 Minutes. "And I think we have to be very thoughtful. And I think these are all things society needs to figure out as we move along. It's not for a company to decide."

Hinton told Pelley he wasn't sure there was a path forward that guarantees safety for humanity.

"We're entering a period of great uncertainty, where we're dealing with things we've never dealt with before," Hinton said. "And normally, the first time you deal with something totally novel, you get it wrong. And we can't afford to get it wrong with these things."